EdbShowAlg_N3 Class Reference

#include <EdbShowAlg_NN.h>

Inheritance diagram for EdbShowAlg_N3:

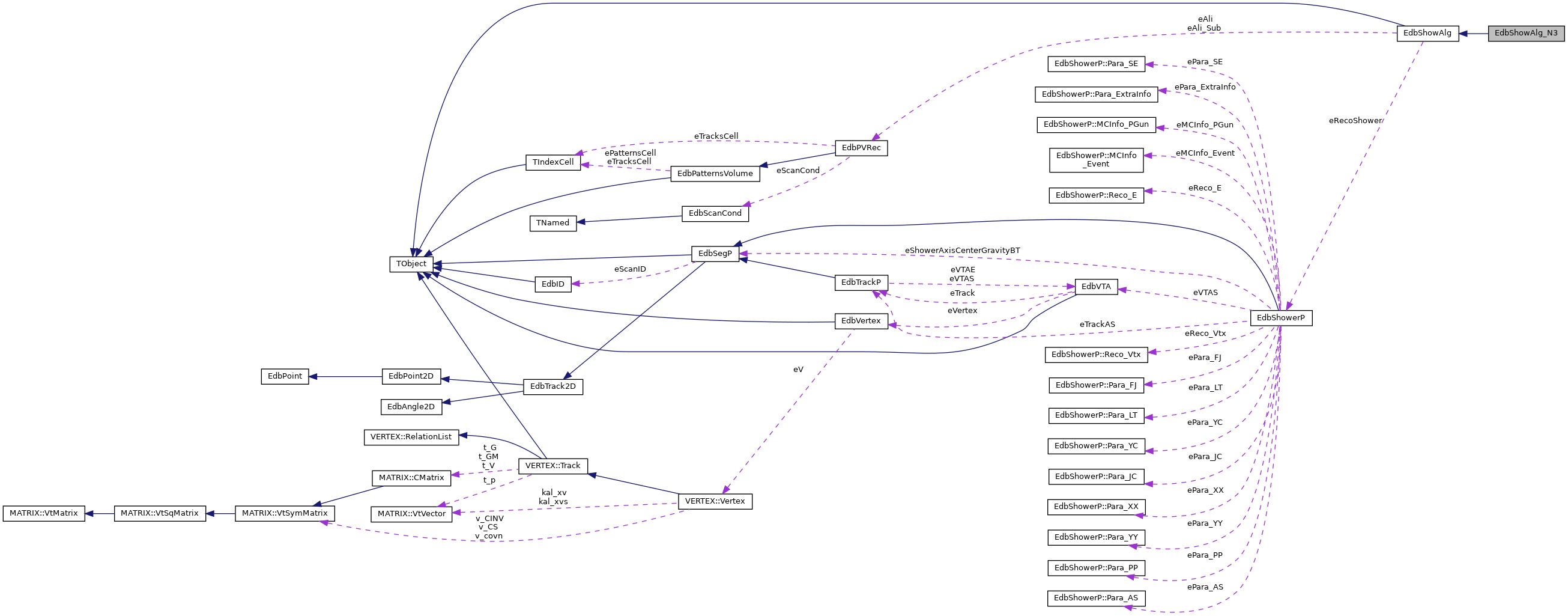

Public Member Functions

| Type | Member |
| --- | --- |
| | ClassDef (EdbShowAlg_N3, 1) |
| TMultiLayerPerceptron * | Create_NN_ALG_MLP (TTree *inputtree, Int_t inputneurons) |
| void | CreateANNTree () |
| | EdbShowAlg_N3 (Bool_t ANN_DoTrain) |
| void | Execute () |
| void | Finalize () |
| TString | GetWeightFileString () |
| void | Init () |
| void | Initialize () |
| void | LoadANNWeights () |
| void | LoadANNWeights (TMultiLayerPerceptron *TMlpANN, TString WeightFileString) |
| void | Print () |
| void | SetANNWeightString () |
| void | SetWeightFileString (TString WeightFileString) |
| virtual | ~EdbShowAlg_N3 () |

Public Member Functions inherited from EdbShowAlg

| Type | Member |
| --- | --- |
| void | AddRecoShowerArray (EdbShowerP *shower) |
| | ClassDef (EdbShowAlg, 1) |
| Double_t | DeltaR_NoPropagation (EdbSegP *s, EdbSegP *stest) |
| Double_t | DeltaR_WithoutPropagation (EdbSegP *s, EdbSegP *stest) |
| Double_t | DeltaR_WithPropagation (EdbSegP *s, EdbSegP *stest) |
| Double_t | DeltaTheta (EdbSegP *s1, EdbSegP *s2) |
| Double_t | DeltaThetaComponentwise (EdbSegP *s1, EdbSegP *s2) |
| Double_t | DeltaThetaSingleAngles (EdbSegP *s1, EdbSegP *s2) |
| | EdbShowAlg () |
| | EdbShowAlg (TString AlgName, Int_t AlgValue) |
| virtual void | Execute () |
| virtual void | Finalize () |
| TString | GetAlgName () const |
| Int_t | GetAlgValue () const |
| Double_t | GetMinimumDist (EdbSegP *seg1, EdbSegP *seg2) |
| TObjArray * | GetRecoShowerArray () const |
| Int_t | GetRecoShowerArrayN () const |
| EdbShowerP * | GetShower (Int_t i) const |
| Double_t | GetSpatialDist (EdbSegP *s1, EdbSegP *s2) |
| void | Help () |
| virtual void | Initialize () |
| Bool_t | IsInConeTube (EdbSegP *sTest, EdbSegP *sStart, Double_t CylinderRadius, Double_t ConeAngle) |
| void | Print () |
| void | PrintAll () |
| void | PrintMore () |
| void | PrintParameters () |
| void | PrintParametersShort () |
| void | SetActualAlgParameterset (Int_t ActualAlgParametersetNr) |
| void | SetEdbPVRec (EdbPVRec *Ali) |
| void | SetEdbPVRecPIDNumbers (Int_t FirstPlate_eAliPID, Int_t LastPlate_eAliPID, Int_t MiddlePlate_eAliPID, Int_t NumberPlate_eAliPID) |
| void | SetInBTArray (TObjArray *InBTArray) |
| void | SetParameter (Int_t parNr, Float_t par) |
| void | SetParameters (Float_t *par) |
| void | SetRecoShowerArray (TObjArray *RecoShowerArray) |
| void | SetRecoShowerArrayN (Int_t RecoShowerArrayN) |
| void | SetUseAliSub (Bool_t UseAliSub) |
| void | Transform_eAli (EdbSegP *InitiatorBT, Float_t ExtractSize) |
| void | UpdateShowerIDs () |
| void | UpdateShowerMetaData () |
| virtual | ~EdbShowAlg () |

Public Attributes

| Type | Member |
| --- | --- |
| TString | eLayout |

Private Attributes

| Type | Member |
| --- | --- |
| Bool_t | eANN_DoTrain =kTRUE |
| Int_t | eANN_EQUALIZESGBG |
| Int_t | eANN_INPUTNEURONS |
| Int_t | eANN_Inputtype |
| Double_t | eANN_Inputvar [24] |
| Int_t | eANN_NHIDDENLAYER |
| Int_t | eANN_NTRAINEPOCHS |
| Double_t | eANN_OUTPUTTHRESHOLD |
| Double_t | eANN_OutputValue =0 |
| Int_t | eANN_PLATE_DELTANMAX |
| TFile * | eANNTrainingsTreeFile |
| TTree * | eANNTree |
| TMultiLayerPerceptron * | eTMlpANN |
| TString | eWeightFileLayoutString |
| TString | eWeightFileString |

Additional Inherited Members

Protected Member Functions inherited from EdbShowAlg

| Type | Member |
| --- | --- |
| void | Set0 () |

Protected Attributes inherited from EdbShowAlg

| Type | Member |
| --- | --- |
| Int_t | eActualAlgParametersetNr |
| TString | eAlgName |
| Int_t | eAlgValue |
| EdbPVRec * | eAli |
| EdbPVRec * | eAli_Sub |
| Int_t | eAli_SubNpat |
| Int_t | eAliNpat |
| Int_t | eFirstPlate_eAliPID |
| TObjArray * | eInBTArray |
| Int_t | eInBTArrayN |
| Int_t | eLastPlate_eAliPID |
| Int_t | eMiddlePlate_eAliPID |
| Int_t | eNumberPlate_eAliPID |
| Int_t | eParaN |
| TString | eParaString [10] |
| Float_t | eParaValue [10] |
| EdbShowerP * | eRecoShower |
| TObjArray * | eRecoShowerArray |
| Int_t | eRecoShowerArrayN |
| Int_t | eUseAliSub |

Constructor & Destructor Documentation

◆ EdbShowAlg_N3()

| EdbShowAlg_N3::EdbShowAlg_N3 (Bool_t ANN_DoTrain) |

    {
      // Constructor with Train/Run switch
      // [...]

      // Reset all:
      // Calls the inherited Set0(), so some values must be reset to NULL
      // manually, unless a new Set0() function is implemented, which is not
      // the case at the moment:
      Set0();

      // [...]

      // See default.par_SHOWREC for labeling (labeling identical with ShowRec program)
      // [...]
      eAlgValue=11;

      // Mostly it will be running, but can be set now here:
      eANN_DoTrain=ANN_DoTrain;

      // Init with values according to N3 Alg:
      Init();

      // [...]
    }

TString eWeightFileLayoutString

Definition: EdbShowAlg_NN.h:150

◆ ~EdbShowAlg_N3()

| EdbShowAlg_N3::~EdbShowAlg_N3 () | virtual |

Member Function Documentation

◆ ClassDef()

| EdbShowAlg_N3::ClassDef (EdbShowAlg_N3, 1) |

◆ Create_NN_ALG_MLP()

| TMultiLayerPerceptron * EdbShowAlg_N3::Create_NN_ALG_MLP (TTree *inputtree, Int_t inputneurons) |

    {
      // [...]

      if (gEDBDEBUGLEVEL>2) cout << "EdbShowAlg_N3::Create_NN_ALG_MLP() inputneurons= " << inputneurons << endl;

      // [...]
        cout << "EdbShowAlg_N3::Create_NN_ALG_MLP() WARNING simu tree is NULL pointer. Return NULL."<< endl;
        // [...]
      }

      // DEBUG START
      // THIS IS TO BE WRITTEN BETTER, BECAUSE THE HANDOVER OF THE PARAMETERS SHOULD
      // BE BETTER .....
      // [...]

      // TO DO HERE.... TAKE OVER THE CORRECT LAYOUT.
      // Only knowledge about the number of input neurons and hidden layers is needed.
      // [...]

      // Create the layout here:
      TString layout="";
      TString newstring="";
      // ANN Input Layer
      // [...]
        newstring=Form("eANN_Inputvar[%d],",loop);
        layout += newstring; // "+" works only with TStrings!
      }
      // [...]
      layout += newstring;
      // Hidden Layers
      // [...]
        layout += newstring;
      }
      // Output Layer, one output neuron
      newstring="eANN_Inputtype";
      layout += newstring;

      // Set Layout String as internal variable now:
      eLayout = layout;

      // DEBUG MESSAGES:
      // [...]
        cout << "simu->Show(0): " << endl;
        simu->Show(0);

        // Create the network:
        // [...]

        // [...]
        cout << "EdbShowAlg_N3::Create_NN_ALG_MLP() GetStructure: " << endl;
        cout << TMlpANN->GetStructure() << endl;
      }
      // [...]
    }
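The layout assembled above follows the ROOT `TMultiLayerPerceptron` convention of comma-separated input names, then colon-separated layer sizes, then the output name (for example `x,y:10:5:f`). A minimal sketch of that string construction using only the standard library is given below. The function name `BuildLayout` and the parameter `neuronsPerHiddenLayer` are hypothetical, and the hidden-layer width is an assumption because the corresponding source lines are missing from the listing.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: builds "in0,in1,...:h:h:...:out" with the branch
// names used in the listing (eANN_Inputvar[i] as inputs, eANN_Inputtype
// as the single output). The width of each hidden layer is an assumption.
std::string BuildLayout(int nInputs, int nHiddenLayers, int neuronsPerHiddenLayer) {
    assert(nInputs > 0 && nHiddenLayers >= 0 && neuronsPerHiddenLayer > 0);
    std::string layout;
    // Input layer: names separated by ',', the last one closed with ':'.
    for (int i = 0; i < nInputs; ++i) {
        layout += "eANN_Inputvar[" + std::to_string(i) + "]";
        layout += (i + 1 < nInputs) ? "," : ":";
    }
    // Hidden layers: one neuron count per layer, each followed by ':'.
    for (int h = 0; h < nHiddenLayers; ++h) {
        layout += std::to_string(neuronsPerHiddenLayer) + ":";
    }
    // Output layer: one output neuron.
    layout += "eANN_Inputtype";
    return layout;
}
```

With two inputs and one hidden layer of three neurons, this sketch yields `eANN_Inputvar[0],eANN_Inputvar[1]:3:eANN_Inputtype`.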

◆ CreateANNTree()

| void EdbShowAlg_N3::CreateANNTree () |

    {
      // [...]

      // [...]

      // Variables and things important for the neural network:
      // [...]

      eANNTree->Print();

      // Default, maximal settings: connections are searched on the same plate and
      // on two plates upstream and downstream, with 4 input variables each,
      // plus 4 fixed input variables for BT(i) to InBT connections: 4+5*4 = 24
      /*
      eANN_PLATE_DELTANMAX=5;
      eANN_NTRAINEPOCHS=100;
      eANN_NHIDDENLAYER=5;
      eANN_EQUALIZESGBG=0;
      eANN_OUTPUTTHRESHOLD=0.85;
      eANN_INPUTNEURONS=24;
      eANN_Inputtype=1;
      */

      cout << "DEBUG: Fill Tree with DUMMY variables --- TO BE CHANGED LATER" << endl;
      for (int i=0; i<10; ++i) {
        for (int l=0; l<24; ++l) {
          eANN_Inputvar[l]=gRandom->Uniform();
        }
        eANN_Inputtype=i%2;
        eANNTree->Fill();
      }
      eANNTree->Show(0);
      // [...]
      cout << "DEBUG: Fill Tree with DUMMY variables --- TO BE CHANGED LATER DONE.." << endl;

      //---------
      // [...]
      return;
    }
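The input count `4+5*4 = 24` and the dummy fill loop can be reproduced without ROOT. The sketch below is only an illustration under stated assumptions: `NumInputNeurons`, `AnnEntry` and `MakeDummyEntries` are hypothetical names, a `std::vector` stands in for the `TTree`, and `std::mt19937` replaces `gRandom`.

```cpp
#include <array>
#include <cassert>
#include <random>
#include <vector>

// 4 fixed variables for the BT(i)-to-InBT connection plus 4 variables per
// considered plate offset (assumed reading of the comment "4+5*4 = 24").
int NumInputNeurons(int plateDeltaNMax) {
    assert(plateDeltaNMax >= 0);
    return 4 + 4 * plateDeltaNMax;
}

// Hypothetical stand-in for one entry of the ANN tree.
struct AnnEntry {
    std::array<double, 24> inputvar{};
    int inputtype = 0;
};

// Mirrors the dummy fill: uniform random inputs and alternating types
// (0, 1, 0, 1, ...), as given by i % 2 in the listing.
std::vector<AnnEntry> MakeDummyEntries(int nEntries, unsigned seed) {
    assert(nEntries >= 0);
    std::mt19937 rng(seed);
    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    std::vector<AnnEntry> entries;
    entries.reserve(static_cast<std::size_t>(nEntries));
    for (int i = 0; i < nEntries; ++i) {
        AnnEntry entry;
        for (double& v : entry.inputvar) v = uniform(rng);
        entry.inputtype = i % 2;
        entries.push_back(entry);
    }
    return entries;
}
```

Calling `NumInputNeurons(5)` gives the 24 input neurons quoted in the comment, and `MakeDummyEntries(10, seed)` corresponds to the ten dummy entries written by the loop.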

◆ Execute()

| void EdbShowAlg_N3::Execute () | virtual |

eANN_Inputvar[1] = GetdR(InBT, seg);

Reimplemented from EdbShowAlg.

    {
      // [...]

      // [...]
        return;
      }

      // TO BE DONE HERE:
      // FILL THE ROUTINE WITH THE CODE FROM ShowReco PROGRAM
      cout << "EdbShowAlg_N3::Execute()...FILL THE ROUTINE WITH THE CODE FROM ShowReco PROGRAM." << endl;

      // Create the root file that contains the training tree data first,
      // otherwise the trees are not connected with the specified file.
      // [...]
      }

      // Variables and things important for the neural network:
      TTree *eANNTrainingsTree = new TTree("TreeSignalBackgroundBT", "TreeSignalBackgroundBT");
      // [...]

      EdbSegP* InBT;
      EdbSegP* Segment;
      EdbSegP* seg;
      EdbShowerP* RecoShower;

      Bool_t StillToLoop=kTRUE;
      Int_t ActualPID;
      Int_t newActualPID;
      Int_t STEP=-1;
      Int_t NLoopedPattern=0;
      // [...]
      if (gEDBDEBUGLEVEL>3) cout << "EdbShowAlg_N3::Execute--- STEP for patternloop direction = " << STEP << endl;

      // [...]

      //--- Loop over InBTs:
      // [...]

      // Since eInBTArray is filled in ascending order by z position,
      // we use the descending loop to begin with the BT with lowest z first.
      // [...]

        // CounterOutPut
        if (gEDBDEBUGLEVEL==2) if ((i%1)==0) cout << eInBTArrayN <<" InBT in total, still to do:"<<Form("%4d",i)<< "\r\r\r\r"<<flush;

        //-----------------------------------
        // 0a) Reset characteristic variables:
        //-----------------------------------

        //-----------------------------------
        // 1) Make eAli with cut parameters:
        //-----------------------------------

        // Create new EdbShowerP object for storage;
        // see EdbShowerP for why the constructor has to be called with a "unique" identifiable value
        //RecoShower = new EdbShowerP(i,eAlgValue);
        // [...]

        // Get InitiatorBT from eInBTArray
        // [...]

        // Clone InBT, because it is modified a lot of times,
        // avoid rounding errors by propagating back and forth
        // [...]

        // Add InBT to RecoShower:
        // This has to be done, since by definition the first BT in the RecoShower is the InBT.
        // Otherwise, also the definition of shower axis and transversal profiles is wrong!
        RecoShower -> AddSegment(InBT);
        cout << "Segment (InBT) " << InBT << " was added to RecoShower." << endl;

        // Transform (make size smaller, extract only events having same MC) the eAli object:
        // See in Execute_CA for description.
        // Transform_eAli(InBT,1400);
        Transform_eAli(InBT,2400);

        //-----------------------------------
        // 2) Loop over (whole) eAli, check BT for Cuts
        //-----------------------------------
        ActualPID= InBT->PID() ;
        newActualPID= InBT->PID() ;

        while (StillToLoop) {
          if (gEDBDEBUGLEVEL>3) cout << "EdbShowAlg_N3::Execute--- --- Doing patterloop " << ActualPID << endl;

          // just to adapt to the nomenclature of the ShowRec program:
          int patterloop_cnt=ActualPID;

          // [...]

          // [...]

            // just to adapt to the nomenclature of the ShowRec program
            seg=Segment;

            // [...]

            // Reset characteristic variables:
            // [...]

            // Now calculate NN input variables: --------------------
            // Calculate the first four input variables, which depend on InitiatorBT only:
            // Important: dT, dMindist is symmetric, dR is NOT!
            // Do propagation from InBT to seg! (i.e. to downstream segments)
            // Loose PreCuts, in order not to get too much BG BT into the training sample
            // [...]
            // Update: It is better to use DistToAxis instead of dR, since dR measures
            // only distance to InBT without taking care of the direction.
            // (does not matter for relatively straight tracks, but for tracks in direction)
            // [...]
            //eANN_Inputvar[1] = GetDistToAxis(InBTClone, seg);
            cout << "// TO BE CHECKED WHERE THE FUNCTION GetDistToAxis(InBTClone, seg); IS!!" << endl;
            // TO BE CHECKED WHERE THE FUNCTION GetDistToAxis(InBTClone, seg); IS!!
            // [...]

            //eANN_Inputvar[2] = GetdeltaThetaSingleAngles(InBT, seg);
            // TO BE CHECKED WHERE THE FUNCTION GetdeltaThetaSingleAngles(InBT, seg); IS!!
            cout << "// TO BE CHECKED WHERE THE FUNCTION GetdeltaThetaSingleAngles(InBT, seg); IS!!" << endl;
            // [...]

            //eANN_Inputvar[3] = GetdMinDist(InBT, seg);
            // TO BE CHECKED WHERE THE FUNCTION GetdMinDist(InBT, seg); IS!!
            cout << "// TO BE CHECKED WHERE THE FUNCTION GetdMinDist(InBT, seg); IS!!" << endl;
            // [...]
            // 1)
            // 2) .... // 24)
            // TO BE FILLED WITH THE CODE FROM SHOWREC PROGRAM
            // end of calculate NN input variables: --------------------

            // ---------------------------------------------------------
            // Calculate eANN Output now:
            eANN_OutputValue=0;
            // Adapt: array params should have as many entries as there are input variables.
            // The eANN_Inputvar array is larger than the array actually used for evaluation.
            // The array with the right size is created when the eANN_INPUTNEURONS
            // variable is fixed (TMLP class demands #arraysize = #inputneurons)
            // This is a kind of dumb workaround, but for now it should work.
            Double_t EvalValue=0;
            Double_t N3_Evalvar4[4];
            Double_t N3_Evalvar8[8];
            Double_t N3_Evalvar12[12];
            Double_t N3_Evalvar16[16];
            Double_t N3_Evalvar20[20];
            Double_t N3_Evalvar24[24];

            // [...]
            }
            // [...]
            }
            // [...]
            }
            // [...]
            }
            // [...]
            }
            else {
              // [...]
            }
            // ---------------------------------------------------------

            // Now apply cut conditions: NN Neural Network Alg --------------------
            Double_t value=0;

            // These conditions still have to be calculated!
            cout << "TO BE DONE" << endl;

            // [...]
            }
            // [...]
            // end of cut conditions: NN Neural Network Alg --------------------

            // If we arrive here, the basetrack Segment has passed the criteria
            // and is then added to the RecoShower:
            // Check that it is not the InBT, which is already added:
            // [...]
              ; // is InBT, do nothing;
            }
            else {
              RecoShower -> AddSegment(Segment);
            }
            cout << "Segment " << Segment << " was added to &RecoShower : " << &RecoShower << endl;
          } // of btloop_cnt

          //------------
          newActualPID=ActualPID+STEP;
          ++NLoopedPattern;

          if (gEDBDEBUGLEVEL>3) cout << "EdbShowAlg_N3::Execute--- --- newActualPID= " << newActualPID << endl;
          if (gEDBDEBUGLEVEL>3) cout << "EdbShowAlg_N3::Execute--- --- NLoopedPattern= " << NLoopedPattern << endl;
          if (gEDBDEBUGLEVEL>3) cout << "EdbShowAlg_N3::Execute--- --- eNumberPlate_eAliPID= " << eNumberPlate_eAliPID << endl;
          if (gEDBDEBUGLEVEL>3) cout << "EdbShowAlg_N3::Execute--- --- StillToLoop= " << StillToLoop << endl;

          // This if holds in the case of STEP== +1
          if (STEP==1) {
            // [...]
            if (newActualPID>eLastPlate_eAliPID) cout << "EdbShowAlg_N3::Execute--- ---Stopp Loop since: newActualPID>eLastPlate_eAliPID"<<endl;
          }
          // This if holds in the case of STEP== -1
          if (STEP==-1) {
            // [...]
            if (newActualPID<eLastPlate_eAliPID) cout << "EdbShowAlg_N3::Execute--- ---Stopp Loop since: newActualPID<eLastPlate_eAliPID"<<endl;
          }
          // This if holds in general, since eNumberPlate_eAliPID does not depend on the structure of the gAli subject:
          // [...]
          if (NLoopedPattern>eNumberPlate_eAliPID) cout << "EdbShowAlg_N3::Execute--- ---Stopp Loop since: NLoopedPattern>eNumberPlate_eAliPID"<<endl;

          ActualPID=newActualPID;
        } // of // while (StillToLoop)

        // Obligatory when shower reconstruction is finished!
        RecoShower ->Update();
        //RecoShower ->PrintBasics();

        // Add shower object to the shower reco array.
        // Not if it is empty:
        // Not if it contains only one BT:
        // [...]

        // Set back loop values:
        StillToLoop=kTRUE;
        NLoopedPattern=0;
      } // of // for (Int_t i=eInBTArrayN-1; i>=0; --i) {

      // Set new value for eRecoShowerArrayN (may now be < eInBTArrayN).
      // [...]

      Log(2,"EdbShowAlg_N3::Execute","eRecoShowerArray():Entries = %d",eRecoShowerArray->GetEntries());
      // [...]
      return;
    }
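The listing declares one fixed-size array per allowed input count (`N3_Evalvar4` to `N3_Evalvar24`) because the evaluation buffer must have exactly as many entries as there are input neurons. A possible alternative, sketched here as an assumption and not as the author's method, is to copy the first `eANN_INPUTNEURONS` entries of the 24-slot input array into a right-sized `std::vector`. The helper name `MakeEvalBuffer` is hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: returns a buffer holding exactly nInputNeurons
// values, taken from the front of the 24-slot input variable array.
std::vector<double> MakeEvalBuffer(const double (&inputvar)[24], int nInputNeurons) {
    assert(nInputNeurons > 0 && nInputNeurons <= 24);
    return std::vector<double>(inputvar, inputvar + nInputNeurons);
}
```

In a ROOT build, the buffer's `data()` pointer could then be handed to `TMultiLayerPerceptron::Evaluate(0, buffer.data())`. Whether this matches the elided branches of the listing is not known.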

Definition: EdbSegP.h:21

void Transform_eAli(EdbSegP *InitiatorBT, Float_t ExtractSize)

Definition: EdbShowAlg.cxx:107

void SetRecoShowerArrayN(Int_t RecoShowerArrayN)

Definition: EdbShowAlg.h:117

Definition: EdbShowerP.h:28
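The plate loop in `Execute()` steps the pattern ID by `STEP` and stops on one of three conditions. The exact statements are missing from the listing, so the sketch below infers them from the debug messages (`newActualPID>eLastPlate_eAliPID`, `newActualPID<eLastPlate_eAliPID`, `NLoopedPattern>eNumberPlate_eAliPID`); treat it as an assumption. The function name `PlatesToVisit` and its parameter names are hypothetical.

```cpp
#include <vector>

// Returns the pattern IDs visited when starting at startPID and stepping
// by `step` (+1 or -1), using the stop conditions inferred from the
// debug output of the listing.
std::vector<int> PlatesToVisit(int startPID, int lastPID, int numberOfPlates, int step) {
    std::vector<int> visited;
    int actualPID = startPID;
    int nLoopedPattern = 0;
    bool stillToLoop = true;
    while (stillToLoop) {
        visited.push_back(actualPID);          // the basetrack loop would run here
        const int newActualPID = actualPID + step;
        ++nLoopedPattern;
        if (step == 1 && newActualPID > lastPID) stillToLoop = false;
        if (step == -1 && newActualPID < lastPID) stillToLoop = false;
        // Independent of direction: never loop over more patterns than exist.
        if (nLoopedPattern > numberOfPlates) stillToLoop = false;
        actualPID = newActualPID;
    }
    return visited;
}
```

With `STEP=-1`, as set in the listing, a start at PID 5 and a last plate at PID 2 visits the patterns 5, 4, 3 and 2 in this sketch.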

◆ Finalize()

| void EdbShowAlg_N3::Finalize () | virtual |

Reimplemented from EdbShowAlg.

    {
      // [...]
      cout << "TO BE DONE HERE: DELETE THE UNNECESSARY OBJECTS CREATED ON THE HEAP..." << endl;
      return;
    }

◆ GetWeightFileString()

| TString EdbShowAlg_N3::GetWeightFileString () | inline |

◆ Init()

| void EdbShowAlg_N3::Init () |

    {
      // [...]

      // [...]
      // Init with values according to N3 Alg:
      // TO BE CHECKED !!
      // Structure should be equal to the one in file:
      // PARAMETERSET_DEFINITIONFILE_N3_ALG.root
      eParaValue[0]=5;
      // [...]
      eParaValue[1]=100;
      // [...]
      eParaValue[2]=7;
      // [...]
      eParaValue[3]=0.8;
      // [...]
      eParaValue[4]=0;
      // [...]
      eParaValue[5]=24;
      // [...]

      cout << "DEBUG::AGAIN, WHERE ARE THE ePARAVALUES SET???????" << endl;

      // [...]

      // Create the tree where the variables for the N3 neural net are stored:
      CreateANNTree();
      // [...]

      // Standard Weights:
      SetANNWeightString();
      LoadANNWeights();

      return;
    }

TMultiLayerPerceptron * Create_NN_ALG_MLP(TTree *inputtree, Int_t inputneurons)

Definition: EdbShowAlg_NN.cxx:891

◆ Initialize()

| void EdbShowAlg_N3::Initialize () | virtual |

Reimplemented from EdbShowAlg.

◆ LoadANNWeights() [1/2]

| void EdbShowAlg_N3::LoadANNWeights () |

    {
      // [...]
        cout << "EdbShowAlg_N3::SetANNWeightString IS EMPTY. Reset to default string!" << endl;
        // [...]
      }
      // [...]
      return;
    }

◆ LoadANNWeights() [2/2]

| void EdbShowAlg_N3::LoadANNWeights (TMultiLayerPerceptron *TMlpANN, TString WeightFileString) |

◆ Print()

| void EdbShowAlg_N3::Print () |

    {
      // [...]

      // [...]
      cout << "Algorithm method related inputs:" << endl;
      // [...]

      cout << "Structure of the Net:" << endl;
      cout << eLayout.Data() << endl;

      // [...]
      return;
    }

◆ SetANNWeightString()

| void EdbShowAlg_N3::SetANNWeightString () |

    {
      // [...]
      // TO DO HERE.... TAKE OVER THE CORRECT WEIGHTFILE STRING.
      cout << "EdbShowAlg_N3::SetANNWeightString() TO DO HERE.... TAKE OVER THE CORRECT WEIGHTFILE STRING. " << endl;

      // if (gEDBDEBUGLEVEL>2)
      // [...]
      return;
    }

◆ SetWeightFileString()

| void EdbShowAlg_N3::SetWeightFileString (TString WeightFileString) | inline |

Member Data Documentation

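◆ eANN_DoTrain

| Bool_t EdbShowAlg_N3::eANN_DoTrain =kTRUE | private |

◆ eANN_EQUALIZESGBG

| Int_t EdbShowAlg_N3::eANN_EQUALIZESGBG | private |

◆ eANN_INPUTNEURONS

| Int_t EdbShowAlg_N3::eANN_INPUTNEURONS | private |

◆ eANN_Inputtype

| Int_t EdbShowAlg_N3::eANN_Inputtype | private |

◆ eANN_Inputvar

| Double_t EdbShowAlg_N3::eANN_Inputvar [24] | private |

◆ eANN_NHIDDENLAYER

| Int_t EdbShowAlg_N3::eANN_NHIDDENLAYER | private |

◆ eANN_NTRAINEPOCHS

| Int_t EdbShowAlg_N3::eANN_NTRAINEPOCHS | private |

◆ eANN_OUTPUTTHRESHOLD

| Double_t EdbShowAlg_N3::eANN_OUTPUTTHRESHOLD | private |

◆ eANN_OutputValue

| Double_t EdbShowAlg_N3::eANN_OutputValue =0 | private |

◆ eANN_PLATE_DELTANMAX

| Int_t EdbShowAlg_N3::eANN_PLATE_DELTANMAX | private |

◆ eANNTrainingsTreeFile

| TFile * EdbShowAlg_N3::eANNTrainingsTreeFile | private |

◆ eANNTree

| TTree * EdbShowAlg_N3::eANNTree | private |

◆ eLayout

| TString EdbShowAlg_N3::eLayout |

◆ eTMlpANN

| TMultiLayerPerceptron * EdbShowAlg_N3::eTMlpANN | private |

◆ eWeightFileLayoutString

| TString EdbShowAlg_N3::eWeightFileLayoutString | private |

◆ eWeightFileString

| TString EdbShowAlg_N3::eWeightFileString | private |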

The documentation for this class was generated from the following files:

- /home/antonio/fedra_doxygen/src/libShowRec/EdbShowAlg_NN.h

- /home/antonio/fedra_doxygen/src/libShowRec/EdbShowAlg_NN.cxx